Meta now has an AI chatbot. Experts say get ready for more AI-powered social media


When you use Facebook Messenger these days, a new prompt greets you with this come-on: “Ask Meta AI anything.”

You may have opened the app to send a text to a pal, but Meta’s new artificial-intelligence-powered chatbot is tempting you with encyclopedic knowledge that’s just a few keystrokes away.

Meta, the parent company of Facebook, has planted its homegrown chatbot on its WhatsApp and Instagram services. Now, billions of internet users can open one of these free social media platforms and draw on Meta AI’s services as a dictionary, guidebook, counselor or illustrator, among many other tasks it can perform, although not always reliably or infallibly.

“Our goal is to build the world’s leading AI and make it available to everyone,” said Mark Zuckerberg, the chief executive officer at Meta, as he announced the chatbot’s launch two weeks ago. “We believe that Meta AI is now the most intelligent AI assistant that you can freely use.”

As Meta’s moves suggest, generative AI is making its way into social media. TikTok has an engineering team focused on developing large language models that can recognize and generate text, and the company is hiring writers and reporters who can annotate and improve the performance of these AI models. Instagram’s help page states, “Meta may use [user] messages to train the AI model, helping make the AIs better.”

TikTok and Meta did not respond to a request for comment, but AI experts said social media users can expect to see more of this technology influencing their experience — for better or possibly worse.


Part of the reason social media apps are investing in AI is that they want to become “stickier” for consumers, said Ethan Mollick, a professor at the Wharton School of the University of Pennsylvania who teaches entrepreneurship and innovation. Apps like Instagram try to keep users on their platforms for as long as possible because captive attention generates ad revenue, he said.

At Meta’s first-quarter earnings call, Zuckerberg said it would take some time for the company to turn a profit from its investments in the chatbot and other uses of AI, but it has already seen the technology influencing user experiences across its platforms.

“Right now, about 30% of the posts on Facebook feed are delivered by our AI recommendation system,” Zuckerberg said, referring to the behind-the-scenes technology that shapes what Facebook users see. “And for the first time ever, more than 50% of the content people see on Instagram is now AI recommended.”

In the future, AI won’t just personalize user experiences, said Jaime Sevilla, who directs Epoch, a research institute that studies AI technology trends. In fall 2022, millions of users were enraptured by Lensa’s AI capabilities as the app generated whimsical portraits from selfies. Expect to see more of this, Sevilla said.

“I think you’re gonna end up seeing entirely AI-generated people who post AI-generated music and stuff,” he said. “We might live in a world where the part that humans play in social media is a small part of the whole thing.”

Mollick, author of the book “Co-Intelligence: Living and Working with AI,” said these chatbots are already producing some of what people read online. “AI is increasingly driving lots of communication online,” he said. “[But] we don’t actually know how much AI writing is out there.”


Sevilla said generative AI probably won’t supplant the digital town square created by social media. People crave the authenticity of their interactions with friends and family online, he said, and social media companies need to preserve a balance between that and AI-generated content and targeted advertising.

Although AI can help consumers find more useful products in their daily lives, there’s also a dark side to the technology’s allure that can teeter into coercion, Sevilla said.

“The systems are gonna be pretty good at persuasion,” he said. A study just published by AI researchers at the Swiss Federal Institute of Technology Lausanne found that GPT-4 was 81.7% more effective than a human at convincing someone in a debate to agree. While the study has yet to be peer reviewed, Sevilla said that the findings were worrisome.

“That is concerning that [AI] might like significantly expand the capacity of scammers to engage with many victims and to perpetrate more and more fraud,” he added.

Sevilla said policymakers should be aware of AI’s dangers in spreading misinformation as the United States heads into another politically charged voting season this fall. Other experts warn that it’s not if, but how AI might play a role in influencing democratic systems across the world.

Bindu Reddy, CEO and co-founder of Abacus.AI, said the solution is a little more nuanced than banning AI on our social media platforms — bad actors were spreading hate and misinformation online well before AI entered the equation. For example, human rights advocates criticized Facebook in 2017 for failing to filter out online hate speech that fueled the Rohingya genocide in Myanmar.

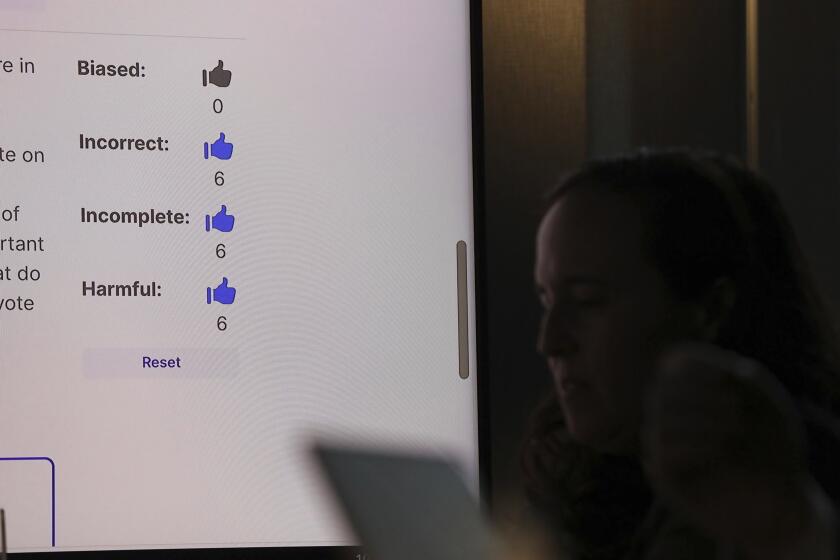

In Reddy’s experience, AI has been good at detecting things such as bias and pornography on online platforms. She’s been using AI for content moderation since 2016, when she released an anonymous social network app called Candid that relied on natural language processing to detect misinformation.


Regulators should prohibit people from using AI to create deepfakes of real people, Reddy said. But she’s critical of laws like the European Union’s sweeping restrictions on the development of AI. In her view it’s dangerous for the U.S. to be caught behind competing countries, such as China and Saudi Arabia, that are pouring billions of dollars into developing AI technology.

So far the Biden administration has published a “Blueprint for an AI Bill of Rights” that offers suggestions for the safeguards that the public should have, including protections for data privacy and against algorithmic discrimination. It isn’t enforceable, though it hints at legislation that may come.

Sevilla acknowledged that AI moderators can be trained to have a company’s biases, leading to some views being censored. But human moderators have shown political biases too.

For example, in 2021 The Times reported on complaints that pro-Palestinian content was made hard to find across Facebook and Instagram. And conservative critics accused Twitter of political bias in 2020 because it blocked links to a New York Post story about the contents of Hunter Biden’s laptop.

“We can actually study like what kind of biases [AI] reflects,” Sevilla said.

Still, he said, AI could become so effective that it could powerfully oppress free speech.

“What happens when all that is in your timeline conforms perfectly to the company guidelines?” Sevilla said. “Is that the kind of social media you want to be consuming?”
