Column: The air begins to leak out of the overinflated AI bubble

You only need to go back three months or so to find the peak of the AI frenzy on Wall Street. That was on June 18, when shares of Nvidia, the Santa Clara company that dominates the market for AI-related hardware, topped out at $136.33.

Since then, shares of the maker of the high-grade computer chips that AI laboratories use to power the development of their chatbots and other products have come down by more than 22%.

That includes a drop of 9.5% Tuesday, which translated into a $279-billion decline in market value, the steepest one-day drop in value of any U.S. stock, ever.

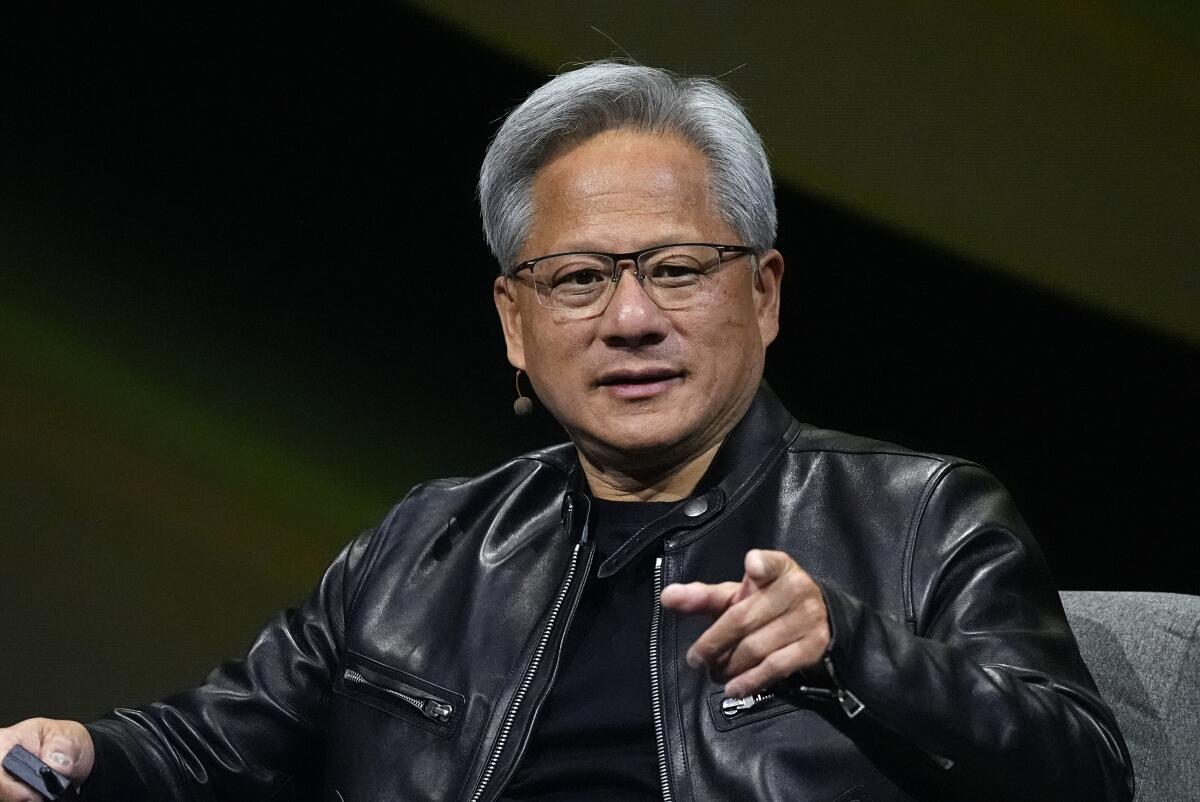

Shed a tear, if you wish, for Nvidia founder and Chief Executive Jensen Huang, whose fortune (on paper) fell by almost $10 billion that day. But pay closer attention to what the market action might be saying about the state of artificial intelligence as a hot technology.

It’s not pretty. Companies that plunged into the AI market for fear of missing out on useful new applications for their businesses have discovered that usefulness is elusive.

The most pressing questions in AI development, such as how to keep chatbots from making up responses to questions they can’t answer — “hallucinating,” as developers call it — haven’t been solved despite years of effort.

Get the latest from Michael Hiltzik

Commentary on economics and more from a Pulitzer Prize winner.

You may occasionally receive promotional content from the Los Angeles Times.

Indeed, some experts in the field report that the bots are getting dumber with every iteration. Even OpenAI, the leading developer of AI bots, acknowledged last year that on some tasks its GPT-4 chatbot’s performance is “worse” than that of its predecessors.

As for the prospect that AI will enable business users to do more with fewer humans in the office or on the factory floor, the technology generates such frequent errors that users may need to add workers just to double-check the bots’ output.

One CEO whose company uses AI to parse what executives promised investment analysts in earlier earnings calls told the Wall Street Journal that it was “60% right, 40% wrong.” (The system even got his name wrong.) He didn’t say so, but a system that churns out mistakes two times out of five is plainly worthless.

Business users have reason to be concerned about using AI bots without human oversight. Even on relatively straightforward tasks, such as spitting out a routine legal filing or answering simple customer service inquiries, AI has failed, sometimes spectacularly.

In a recent case, a marketing consultant used AI to help produce a trailer for Francis Ford Coppola’s critically disdained new movie, “Megalopolis,” that displayed critical pans of his earlier films, including “The Godfather.”

Variety reported that the bot fabricated negative statements and attached them to the names of critics who had actually appreciated the earlier films. Last year a New Zealand grocery chain’s AI recipe bot advised users to combine bleach and ammonia for a thirst-quenching beverage. In fact, the combination is potentially deadly.

These are tasks on which the current AI models should excel — churned-out boilerplate, advertising copy, customer service information that can be obtained by pressing a button on your touch-tone phone.

That’s because bots are developed by being fed unimaginably large quantities of published works, internet posts, and other mostly generic written material. Developers then apply algorithms that allow the bots to emit responses that resemble human language, but are based on the probabilities that a given word should follow another — one reason that the bot action is often dismissed as “autocomplete on steroids.”
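To make the “autocomplete” idea concrete, here is a minimal sketch in Python of next-word prediction from word-pair counts. It is a toy, not how any commercial chatbot is actually built: real systems use large neural networks and take far more context into account. The corpus and the function names (`train_bigrams`, `next_word_probabilities`, `autocomplete`) are invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def autocomplete(follows, start, length=5):
    """Extend `start` by always choosing the most likely next word."""
    out = [start]
    for _ in range(length):
        probs = next_word_probabilities(follows, out[-1])
        if not probs:
            break  # the last word was never followed by anything in training
        out.append(max(probs, key=probs.get))
    return " ".join(out)


# Hypothetical toy corpus, made up for this example.
corpus = (
    "the bot wrote the memo and the bot wrote the recipe "
    "and the bot made the error"
)
model = train_bigrams(corpus)
print(next_word_probabilities(model, "bot"))
print(autocomplete(model, "the", length=4))
```

On this tiny corpus the sketch quickly loops back on itself, stringing together words that are statistically likely but say nothing. That is the core of the “autocomplete” criticism: the output follows the patterns of the training text, with no check on whether it is true.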

These systems, however, aren’t “intelligent” in any sense of the term. They produce simulacrums of cogent thought, but often fall short on tasks requiring human levels of discernment, such as medical diagnosis and treatment.

A British study published in July compared how human experts and an AI system assessed 1,020 mammogram results. The humans found 18 breast cancers missed by the AI system, while the AI system found only two missed by the humans. “By 2024, shouldn’t AI/machine learning do better?” asked Michael Cembalest, the chief market strategist at JPMorgan Asset Management, in an analysis of the AI market this month.

Other studies suggest that AI can be helpful in diagnosing medical conditions, but only when used as a technological tool under the supervision of human physicians. Diagnosis and treatment of patients require “emotional intelligence and moral agency, attributes that AI may be able to mimic but never truly possess,” bioethicists at Yale and Cornell wrote earlier this month.

One persistent concern about AI is its potential for misuse for nefarious ends, such as making it easier to shut down an electric grid, melt down the financial system, or produce deepfakes to deceive consumers or voters. That’s the topic of Senate Bill 1047, a California measure awaiting the signature of Gov. Gavin Newsom (who hasn’t said whether he’ll approve it).

The bill mandates safety testing of advanced AI models and the imposition of “guardrails” to ensure they can’t slip out of the control of their developers or users and can’t be employed to create “biological, chemical, and nuclear weapons, as well as weapons with cyber-offensive capabilities.” It’s been endorsed by some AI developers but condemned by others who assert that its constraints will drive AI developers out of California.

It’s true that some of the contemplated risks seem unlikely in the foreseeable future, but the bill’s sponsor, state Sen. Scott Wiener (D-San Francisco), says it has been drafted to cover more than distant eventualities.

“The focus of this bill is how these models are going to be used in the near future,” Wiener told me. “The opposition routinely tries to disparage the bill by saying it’s all about science-fiction and ‘Terminator’ risks. But we’re focused on very real-world risks that most people can envision and that are not futuristic.”

That brings us to doubts not about AI’s risks but about its real-world utility for business. These doubts have been spreading in industry as more businesses try to use the technology and find that it has been oversold. A study by Boston Consulting Group found last year, for instance, that “for business problem solving,” using the most advanced version of OpenAI’s GPT chatbot “resulted in performance that was 23% lower than that of the control group.”

As the consultants noted, “it isn’t obvious when the new technology is (or is not) a good fit, and the persuasive abilities of the tool make it hard to spot a mismatch. ... Even participants who were warned about the possibility of wrong answers from the tool did not challenge its output.”

Some investment analysts say that so much has been invested in AI that the big developers such as Microsoft, Meta and Google may not see returns for years, if ever. In coming years, Goldman Sachs analysts reported in June, “tech giants and beyond are set to spend over $1 trillion on AI ... with so far little to show for it. So, will this large spend ever pay off?”

Nvidia’s dominance of the market for AI hardware raises questions about the effect on the financial markets if the firm stumbles financially or technologically.

JPMorgan’s Cembalest titled his analysis of the market’s future “A severe case of COVIDIA.” AI is “driving the [venture capital] ecosystem,” he noted, producing more than 40% of new “unicorns” (startups worth $1 billion or more) in the first half of this year and more than 60% of the increases in valuations of venture-backed startups.

The instability this imposes on investment markets was visible Tuesday, when Nvidia’s downdraft helped to bring the Nasdaq composite index down by more than 577 points, or 3.26%.

Nvidia’s decline was fueled by projections of a slowdown in its growth, which up to now has been spectacular, as well as a report that federal regulators had issued the company a subpoena related to antitrust concerns. (Nvidia later denied receiving a subpoena.) The broad market also declined, in part because of signs of a slowdown in U.S. job growth.

Others also have warned that the AI frenzy has played out or that the technology’s potential has been hyped.

“At the very peak of inflated expectations in finance is generative AI,” the business technology consultancy Gartner warned last month. “AI tools have generated enormous publicity for the technology in the last two years, but as finance functions adopt this technology, they may not find it as transformative as expected.”

That may be true of projected economic gains from AI more broadly. In a recent paper, MIT economist Daron Acemoglu forecast that AI would produce an increase of only about 0.5% in U.S. productivity and an increase of about 1% in gross domestic product over the next 10 years, mere fractions of standard economic projections.

In an interview for the Goldman Sachs report, Acemoglu observed that the potential social costs of AI seldom are counted by economic prognosticators.

“Technology that has the potential to provide good information can also provide bad information and be misused,” he said. “A trillion dollars of investment in deepfakes would add a trillion dollars to GDP, but I don’t think most people would be happy about that or benefit from it. ... Too much optimism and hype may lead to the premature use of technologies that are not ready for prime time.”

Hype remains the defining feature of discussions of the future of AI today, most of it emanating from AI firms such as OpenAI and their corporate sponsors, including Microsoft and Google.

The vision of a world remade by this seemingly magical technology has attracted investments measured in the hundreds of billions of dollars. But if it all evaporates in a flash because the vision has proved to be cloudy, that wouldn’t be a surprise. It wouldn’t be the first time such a thing happened, and surely won’t be the last.
